iQ3Connect 2025.2 is here! We’re making XR training and work instruction creation easier, faster, and more powerful with new automation capabilities and AI/IoT integrations – so you can focus on what matters most: upskilling your workforce and improving operational efficiency. Key updates include Automated Experience Creation, a new Step Instruction Menu, and AI and IoT integration for work instructions, task verification, and digital twins. Explore the new capabilities of iQ3Connect 2025.2 below.

Training and Work Instructions

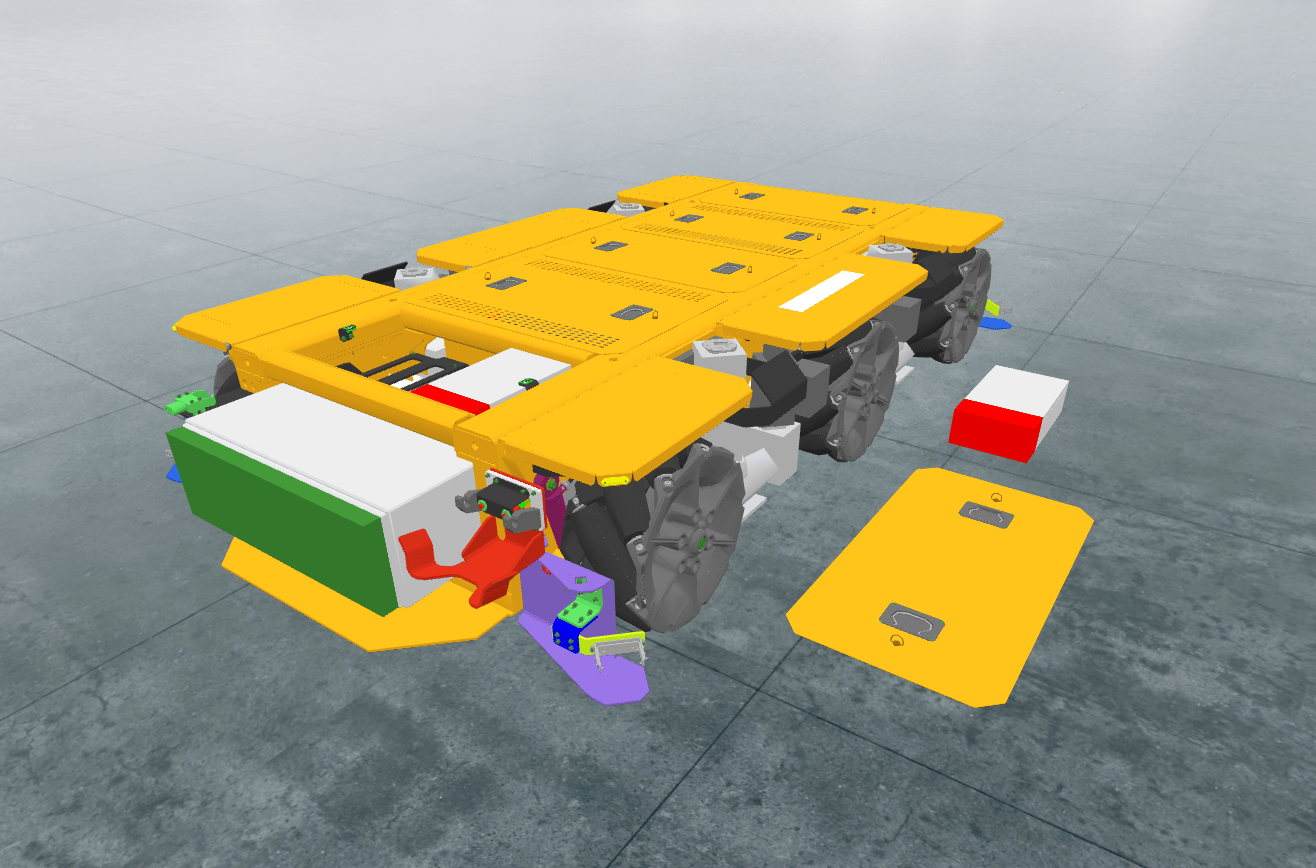

Automated Experience Creation: Automatically create XR training experiences with the new Training Wizard, or quickly duplicate existing content and structure with the new copy/paste functionality. The Training Wizard automatically builds a fully functioning XR experience from two or more States (i.e., Scenes/Steps), and it can also add Next/Back navigation buttons to a step-by-step experience. The copy/paste functionality lets you quickly duplicate existing content (actions) or structure (timelines).

Work Instruction Task Verification – AI + IoT Integration: Integrate artificial intelligence outputs and IoT sensor data into XR experiences. A new training action, External Signal Receiver, listens for external data input and updates the XR experience in real time, either reporting the data as-is or changing the experience dynamically based on its content. These integrations are primarily targeted toward digital twins and work instruction task verification, but they can be applied to any use case.
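To make the two receiver behaviors concrete, here is a minimal conceptual sketch in Python. It is not the iQ3Connect API: `SignalReceiver`, its `"report"`/`"react"` modes, the sensor name, and the 40 N·m torque threshold are all hypothetical, chosen only to contrast reporting a value as-is with changing the experience based on the value (e.g., for task verification).

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


# Hypothetical illustration only: this class is NOT part of iQ3Connect.
@dataclass
class SignalReceiver:
    """Conceptual stand-in for an action that reacts to external data."""

    mode: str  # "report": show the value as-is; "react": update the scene based on it
    rule: Optional[Callable[[float], str]] = None  # maps a value to a scene update in "react" mode
    log: List[str] = field(default_factory=list)   # every update produced so far

    def on_signal(self, sensor_id: str, value: float) -> str:
        if self.mode == "report":
            update = f"{sensor_id}: {value}"  # pass the reading through unchanged
        elif self.mode == "react" and self.rule is not None:
            update = self.rule(value)          # decide what the scene should do
        else:
            raise ValueError(f"unsupported mode: {self.mode}")
        self.log.append(update)
        return update


# Report a torque reading as-is.
display = SignalReceiver(mode="report")
print(display.on_signal("torque_sensor", 42.5))  # torque_sensor: 42.5

# Mark a step verified once torque reaches a hypothetical 40 N·m target.
verify = SignalReceiver(
    mode="react",
    rule=lambda v: "step_complete" if v >= 40.0 else "step_pending",
)
print(verify.on_signal("torque_sensor", 42.5))  # step_complete
```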

Step Instruction Menu: The new Step Instruction Menu provides an improved UI that combines text information with menus and buttons. The Step Instruction Menu is completely customizable, from the colors, to the icons, to the number of buttons. It works in 2D, AR, and VR modes on mobile, tablet, PC, and HMD devices.

XR Action – Time Tracking: Capture the time it takes to perform a step (or any arbitrary sequence) using the new Time Tracking actions. An unlimited number of durations can be tracked, with each duration stored as a data point within the experience. This data can be passed automatically to an LMS or easily exported to third-party tools.
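The idea of tracking any number of named durations and exporting them can be sketched as follows. This is a hypothetical illustration, not how iQ3Connect stores its data: the `TimeTracker` class, its method names, and the CSV export format are assumptions. The clock is injectable so the example produces deterministic numbers.

```python
import time
from typing import Callable, Dict, List


# Hypothetical sketch: names and export format are illustrative, not iQ3Connect's.
class TimeTracker:
    """Records any number of named durations as data points."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock                  # injectable so tests can use fake time
        self._starts: Dict[str, float] = {}  # label -> start time of an open interval
        self.data_points: List[dict] = []    # one entry per completed duration

    def start(self, label: str) -> None:
        self._starts[label] = self._clock()

    def stop(self, label: str) -> float:
        # Raises KeyError if start() was never called for this label.
        elapsed = self._clock() - self._starts.pop(label)
        self.data_points.append({"label": label, "seconds": elapsed})
        return elapsed

    def export_csv(self) -> str:
        rows = ["label,seconds"]
        rows += [f"{d['label']},{d['seconds']:.3f}" for d in self.data_points]
        return "\n".join(rows)


# Usage with a fake clock: step_1 takes 12.5 s, step_2 takes 7.5 s.
ticks = iter([0.0, 12.5, 12.5, 20.0])
tracker = TimeTracker(clock=lambda: next(ticks))
tracker.start("step_1")
tracker.stop("step_1")
tracker.start("step_2")
tracker.stop("step_2")
print(tracker.export_csv())
# label,seconds
# step_1,12.500
# step_2,7.500
```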

XR Action – Flash Object: A new training action that makes a specified object flash. The flashing can be customized to change the object's visibility, transparency, or highlighting.

Animations in GLB files: Playback of animations embedded in GLB files now supports three end-of-animation behaviors: Reset, Stop, and Loop. Reset returns the animated objects to their initial position/configuration, Stop leaves them in the position/configuration reached at the end of the animation, and Loop plays the animation continuously. These settings are part of the GLB Animation action.
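The difference between the three end behaviors can be expressed as a mapping from elapsed playback time to a time within the clip. The sketch below is a hypothetical illustration, not iQ3Connect's implementation, and it assumes that the pose at clip time 0 is the objects' initial configuration.

```python
from enum import Enum


class EndBehavior(Enum):
    RESET = "reset"  # snap back to the initial configuration when the clip ends
    STOP = "stop"    # hold the configuration reached at the end of the clip
    LOOP = "loop"    # restart from the beginning and play continuously


def clip_time(elapsed: float, duration: float, behavior: EndBehavior) -> float:
    """Map elapsed playback time to a time within a clip of length `duration`.

    Hypothetical sketch; assumes clip time 0 corresponds to the initial pose.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    if elapsed < duration:
        return elapsed             # still playing: all behaviors agree
    if behavior is EndBehavior.RESET:
        return 0.0
    if behavior is EndBehavior.STOP:
        return duration
    return elapsed % duration      # LOOP: wrap around


for b in EndBehavior:
    print(b.value, clip_time(elapsed=5.0, duration=2.0, behavior=b))
# reset 0.0
# stop 2.0
# loop 1.0
```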

Augmented Reality – Virtual Hands: When entering AR mode on a head-mounted display (HMD), the virtual hands are no longer shown by default. Instead, the virtual menus and guides are now mapped directly to the user's physical hands, giving the user a clearer view of the physical space. As part of this change, a new setting lets users turn the virtual hands back on and make that the default behavior.

Text Box Behavior Improvements: Easily display text to end-users in 3D environments with the new Text Box behavior, Scene-Follow. In Scene-Follow mode, text boxes appear in front of the user and gradually follow them around the 3D environment.
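One common way to make an object "gradually follow" a user is to recompute a target point in front of them each frame and move the object a fraction of the remaining distance toward it. The sketch below uses frame-rate-independent exponential smoothing; this is an assumed technique for illustration, and the function names, follow distance, and `rate` parameter are hypothetical, not taken from iQ3Connect.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def follow_target(head_pos: Vec3, forward: Vec3, distance: float) -> Vec3:
    """Point `distance` units in front of the user along their horizontal forward vector."""
    fx, _, fz = forward
    norm = math.hypot(fx, fz) or 1.0  # ignore pitch; avoid dividing by zero
    return (head_pos[0] + distance * fx / norm,
            head_pos[1],              # keep the box at eye height
            head_pos[2] + distance * fz / norm)


def step_toward(current: Vec3, target: Vec3, dt: float, rate: float = 2.0) -> Vec3:
    """Move a fraction of the way toward `target`, independent of frame rate."""
    alpha = 1.0 - math.exp(-rate * dt)  # share of the remaining gap closed this frame
    x, y, z = (c + alpha * (t - c) for c, t in zip(current, target))
    return (x, y, z)


# Usage: a box starting at the user's head eases toward a point 1.5 units ahead.
box: Vec3 = (0.0, 1.6, 0.0)
target = follow_target(head_pos=(0.0, 1.6, 0.0), forward=(0.0, 0.0, 1.0), distance=1.5)
for _ in range(90):  # about 1.5 s at 60 fps
    box = step_toward(box, target, dt=1 / 60)
```

Larger `rate` values make the box catch up faster; because the step depends on `dt`, the motion looks the same at different frame rates.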

XR Performance Improvements: Lag and frame-rate drops during animations and state transitions (i.e., LoadState actions) have been greatly reduced, resulting in a smoother overall experience for end-users.

Improved UI/UX for Experience Authoring: Several important elements of the experience creation process have been updated to provide an improved UI/UX. The Training Add Action menu has been reorganized into a more intuitive order, and the Create State and Add Timeline buttons have been moved to their relevant section headers, eliminating the need to scroll to access these functions.

Reduced the Number and Frequency of the Movement Locked and Orientation Locked Messages: When an end-user is in an XR experience with movement or orientation locked, a message appears when they try to move or rotate, informing them that their controls are locked. These messages were appearing too often, which could disrupt the overall experience, so their number and frequency have been greatly reduced.

Virtual Workspaces and Classrooms

Real-Time Digital Twins – AI + IoT Integration: Virtual workspaces can now be linked to data sources such as IoT sensors and AI output to create digital twins that update in real time based on the real-world environment. These integrations are primarily targeted toward digital twins and work instruction task verification, but they can be applied to any use case.

Save Scene in 2D Menu: The Save Scene button has been added to the standard 2D menu, making it more readily accessible. It can now be found in the upper-left corner menu under My Content > Scenes & Sessions; see image below. Note: It is also still available in the XR menu.

Enterprise Hub

Launch Workspace Improvements: Launching a Workspace has been streamlined to provide easier access to XR models and Workspace settings. Launching a Workspace directly from the Project Home screen now automatically selects all of the available XR models in that Project. Alternatively, entire folders can now be selected from the XR Models page when launching a Workspace. Finally, a new quick-settings menu appears when launching a Workspace, with options to change the Workspace duration, the public/private setting, and whether the menu is enabled or disabled.

Project Management and Default Template Editing: The ability to manage and administer Projects has been expanded. The Default Project Template can now be edited. Project Templates can now be used to update Projects (individually or in bulk) even after those Projects have been created. The background environment can now be customized as part of the Project Template.

UI/UX Improvements for Inviting Users to Projects and Accessing Multimedia: The UI/UX for inviting users to Projects has been greatly improved, making it easier to invite users quickly and see which invitations are still pending. Additionally, the Multimedia page is now the first page shown when the External Assets tab is selected.

Announcements Readability Improvement: When an announcement is selected, it now opens fully expanded in a pop-up to improve readability.